Using cutting-edge technologies like Kubernetes pushes our engineers into new technical challenges every day.

We face the complexity and uncertainty of multiple components stacked on top of each other. Each one has fast release cycles and is therefore prone to bugs introduced from one version to the next.

In such a scenario there is great value in the history that brought the software to its current state. Knowing the decisions the community took in the past can help you debug present issues and possibly save you a headache.

TL;DR

If you run ingress-nginx on Kubernetes at the latest version (0.24.1 at the time of writing), you are likely to be affected by a 3-year-old bug that was reintroduced in version 0.22.0.

The issue concerns the `round_robin` balancing type, which is the ingress default. It causes the applications immediately behind the ingress to receive traffic skewed towards a subset of pods instead of evenly distributed traffic. Configure the ingress to use the `ewma` algorithm to work around this behaviour.

To read more, see the issue in the ingress-nginx repository.

The long story

lastminute.com has been pioneering the adoption of Kubernetes and has been active in the community since the very beginning. In 2016 our Platform team discovered a problem with the balancing of incoming requests and reported it to the ingress-nginx maintainers. In the data recorded at that time (with ingress version 0.8), we noticed that the list of IPs used by multiple ingress pods was exactly the same because it was sorted. This meant that the first request of every ingress instance was routed to the same backend pod. This is not a problem with a small ingress deployment. It becomes dangerous as the number of ingress replicas grows, because you risk overloading an application tuned for a certain QPS value. We patched the bug internally and unfortunately were not able to contribute the fix upstream, but it was eventually patched in the official repo too.

Last week, one of our product development teams reported instability in an application during deployment. We use canary releases, and each application lives in a dedicated namespace. When the canary started to roll out, the stable release pods showed higher CPU load, and GC and response times grew. After some minutes the situation went back to normal. We started our investigation and found the problem a couple of hours later: the instability was caused by higher traffic being delivered to only a subset of pods in the stable deployment! The ingress was not forwarding an equal number of requests to all pods. Instead it was sending more requests to some pods and fewer to others… Blast from the past! The ingress bug had returned 3 years and 16 versions later!

Discovering

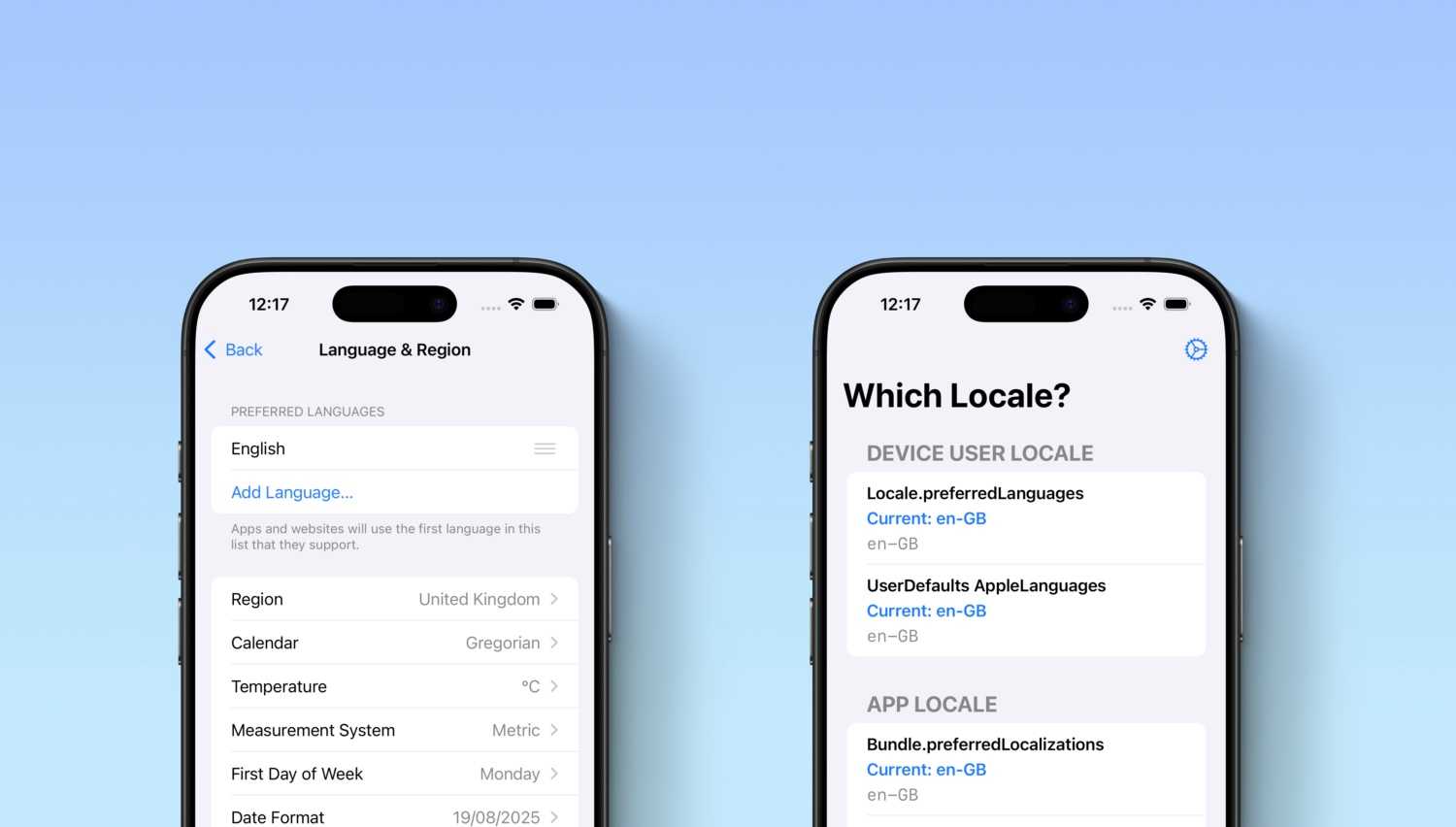

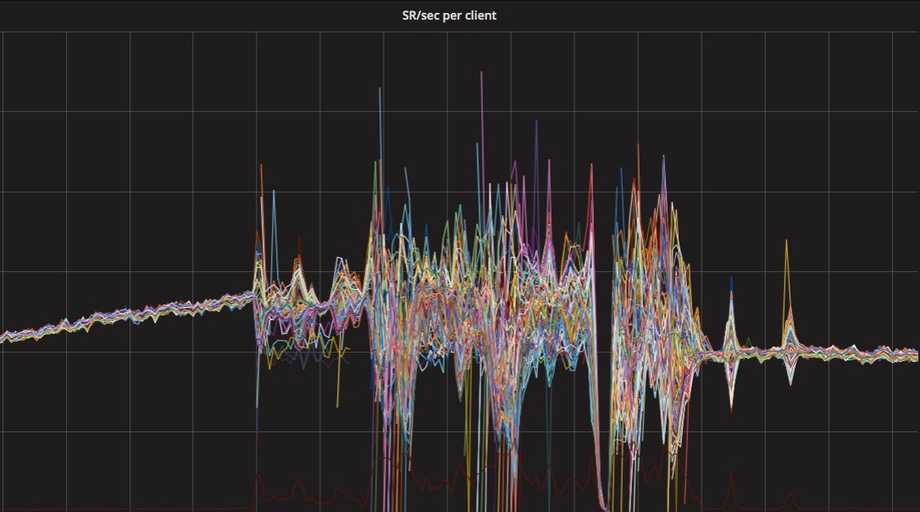

The following graph represents the input of our investigation. Each line is the number of requests per second handled by a pod behind the same ingress rule, for a publicly reachable service in our production cluster.

The graph shows that the normal behaviour is a uniform number of requests per pod. At a certain moment, however, the deviation of requests per pod starts to increase: some pods get more requests, some fewer. The turbulence goes on and amplifies for some minutes, then the service recovers.
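One simple way to quantify this kind of imbalance from per-pod metrics is to compute, at each timestamp, the mean and the standard deviation of requests per second across the pods behind the rule. The sketch below is not our monitoring code. The input format is an assumption: a dict mapping a hypothetical pod name to an aligned series of RPS samples.

```python
from statistics import mean, pstdev


def rps_spread(samples: dict[str, list[float]]) -> list[tuple[float, float]]:
    """Return (mean RPS per pod, population std dev across pods) per timestamp.

    Assumes every series in `samples` covers the same timestamps, in the same order.
    """
    # zip(*...) turns per-pod series into one tuple of per-pod values per timestamp
    return [(mean(step), pstdev(step)) for step in zip(*samples.values())]


if __name__ == "__main__":
    # hypothetical data: three pods, balanced at first, then one pod gets extra traffic
    series = {
        "pod-a": [100.0, 100.0, 160.0],
        "pod-b": [100.0, 100.0, 70.0],
        "pod-c": [100.0, 100.0, 70.0],
    }
    for avg, dev in rps_spread(series):
        print(f"mean={avg:.1f} rps, stddev={dev:.1f}")
```

In the last sample of the toy data, the mean stays flat while the standard deviation jumps. That is the pattern described above: the total traffic is unchanged, but its distribution across pods is not.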

We were able to match the moment the request rates start to diverge with the start of a canary release for the application. We started to wonder why the canary pods should have any effect on the requests received by other pods: the only links between canary and regular pods are the Service and the Ingress resources.

When canary pods are added to a deployment, the Endpoints object is updated by the endpoints controller, and this generates 2 events:

- the iptables rules are updated by `kube-proxy` with the list of Pod IPs behind a `Service`

- the NGINX upstream configuration changes, updating the Pod IPs behind a `Service`

Remembering that a similar issue had happened before, we set aside the iptables hypothesis and started looking into the NGINX ingress controller. We found that since version 0.22.0 the way requests are distributed across backends had changed: a Lua script now performs the balancing. When NGINX is configured this way, it expects a Lua implementation to dispatch requests to a list of peers. We did a deep dive into the Lua code used specifically by the ingress and found the issue.

This is the `round_robin` balancing algorithm implementation (the default used if none is specified in the ConfigMap or in an annotation):

```lua
local _M = balancer_resty:new({ factory = resty_roundrobin, name = "round_robin" })

function _M.new(self, backend)
  local nodes = util.get_nodes(backend.endpoints)
  local o = {
    instance = self.factory:new(nodes),
    traffic_shaping_policy = backend.trafficShapingPolicy,
    alternative_backends = backend.alternativeBackends,
  }
  setmetatable(o, self)
  self.__index = self
  return o
end
```

Here `util.get_nodes(backend.endpoints)` receives the endpoint list taken from the Kubernetes Endpoints API object:

```lua
function _M.get_nodes(endpoints)
  local nodes = {}
  local weight = 1

  for _, endpoint in pairs(endpoints) do
    local endpoint_string = endpoint.address .. ":" .. endpoint.port
    nodes[endpoint_string] = weight
  end

  return nodes
end
```

The problem with this code is subtle. It is the kind of bug that can be noticed only at scale, and it is very difficult to test: the Endpoints JSON that comes from the Kubernetes API is always the same for all ingress pods.

Lua does not formally guarantee the iteration order of `pairs`. In practice, though, every ingress instance receives the same input, builds the same table and traverses it in the same way, so each round-robin balancer starts from the same pod. If you have a number of ingress instances (say 20) behind a load balancer, the first request handled by each instance goes to the first pod in the Endpoints list. The first 20 requests all hit the same pod.

Here is a graphical representation of the flow of the first N requests for an ingress deployment of N pods: the first N requests all get routed to the first backend pod in the list.

This is not a big issue for small-scale ingress deployments and services. It only happens when the configuration is refreshed, i.e. when pods are added to or removed from the deployment (for example during a rollout). It became a problem on one of our highest-traffic services because of the high number of ingress pods. The extra traffic handled by a subset of pods (the first ones in the list) caused longer GC times and slower responses, degrading the service.
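The effect can be reproduced with a toy model in which each ingress replica builds its own round-robin balancer from the same ordered endpoint list, so every replica starts from the same pod. This is a simplified sketch, not ingress-nginx or `resty.roundrobin` code. It makes three assumptions: a plain cycle over equally weighted endpoints, an external load balancer that hands exactly the first K requests to each replica, and hypothetical pod addresses.

```python
from collections import Counter
from itertools import cycle


def first_requests(endpoints: list[str], ingress_replicas: int,
                   requests_per_replica: int = 1) -> Counter:
    """Count where the first requests land when every ingress replica starts
    its round robin from the same position of the same list."""
    hits: Counter = Counter()
    for _ in range(ingress_replicas):
        # every replica sees the same list in the same order,
        # so every replica's iterator starts from endpoints[0]
        rr = cycle(endpoints)
        for _ in range(requests_per_replica):
            hits[next(rr)] += 1
    return hits


if __name__ == "__main__":
    pods = [f"10.0.0.{i}:8080" for i in range(1, 6)]  # hypothetical pod IPs
    print(first_requests(pods, ingress_replicas=20))  # Counter({'10.0.0.1:8080': 20})
```

With 20 replicas and one request each, all 20 requests land on the first pod. Once each replica has cycled through the whole list, the load evens out again. That is why the skew shows up only right after a configuration refresh.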

Verifying

To prove our hypothesis we did 2 things:

- verify that the configuration of multiple ingress pods was the same, with the same order of pod IPs in each backend

- experiment with a different balancing algorithm to see if the problem would be resolved

To verify the ingress pods' configuration, we followed the official troubleshooting guide. You can run the controller container locally on your workstation and connect it to the Kubernetes API with the following commands:

```bash
# after having run kubectl proxy in another shell...
docker run --rm -ti --name nginx-ingress-local --user root --privileged -v "$HOME/.kube:/kube" \
  -e "POD_NAME=nginx-ingress-ctrl-upgrade-78d9b95ccd-abcd1" \
  -e "POD_NAMESPACE=ingress-nonprd" \
  registry.bravofly.intra:5000/application/nginx-ingress-controller:0.24.1-gcr \
  /nginx-ingress-controller --kubeconfig /kube/config \
  --default-backend-service=ingress-nonprd/default-backend-upgrade --configmap=ingress-nonprd/cfm-nginx-ingress-ctrl-upgrade \
  --annotations-prefix=ingress.kubernetes.io --sync-period=10s --stderrthreshold=0 --logtostderr=0 --log_dir=/var/log/nginx --v=5

# with the container running, open another terminal and exec into it
docker exec --user root -ti nginx-ingress-local /bin/bash

# ensure that nginx was built with debug support (nginx -V prints to stderr),
# then start the master process in this terminal session
nginx -V 2>&1 | grep -- '--with-debug'
nginx
```

From here you can dump the configuration using the gdb commands in the guide.

The same can be achieved with the pods running in the cluster. First get a couple of pod names, then run:

```bash
# get ingress pods
kubectl -n ingress-namespace get pods
kubectl -n ingress-namespace exec -ti ingress-nginx-aaabbbccd-123ab -- /bin/bash

# once in the shell, get backends from the configuration URL
curl --unix-socket /tmp/nginx-status-server.sock http://fakehost/configuration/backends
```

You can also dump this output to a file and copy it to your workstation with `kubectl cp`. We did so and proved our assumption: the list of IPs was exactly the same! The shuffling bug had returned!
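A small script can compare the dumps from two ingress pods instead of eyeballing them. It extracts the ordered `address:port` list for each backend and reports the backends whose order is identical. This is a sketch built on one assumption: the `/configuration/backends` output is a JSON list of backends, each with a `name` and an `endpoints` list of objects carrying `address` and `port`, the same fields the Lua code above reads. The file names in the usage example are hypothetical.

```python
import json


def endpoint_order(backends: list[dict]) -> dict[str, list[str]]:
    """Map each backend name to its ordered list of "address:port" strings."""
    return {
        b["name"]: [f'{e["address"]}:{e["port"]}' for e in (b.get("endpoints") or [])]
        for b in backends
    }


def compare(a: list[dict], b: list[dict]) -> list[str]:
    """Names of non-empty backends present in both dumps with identical endpoint order."""
    order_a, order_b = endpoint_order(a), endpoint_order(b)
    return sorted(
        name for name in order_a.keys() & order_b.keys()
        if order_a[name] and order_a[name] == order_b[name]
    )


def compare_files(path_a: str, path_b: str) -> list[str]:
    """Load two JSON dumps (one per ingress pod) and compare them."""
    with open(path_a) as fa, open(path_b) as fb:
        return compare(json.load(fa), json.load(fb))
```

For example, `compare_files("ingress-a-backends.json", "ingress-b-backends.json")` lists the backends whose endpoint order matches between the two pods. For a backend showing up there, both replicas will start their round robin from the same place.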

Fixing

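The only way to address this issue right now is to switch to the `ewma` algorithm. You can do so by setting `load-balance: ewma` in the ConfigMap passed to the ingress controller (the `--configmap` parameter). Alternatively, add the annotation `ingress.kubernetes.io/load-balance: "ewma"` to the Ingress resource definition YAML. Our controller runs with `--annotations-prefix=ingress.kubernetes.io`; with the default prefix, the annotation is `nginx.ingress.kubernetes.io/load-balance`.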

The difference between the two options is scope. The ConfigMap setting applies globally to all ingresses, while the annotation lets you pinpoint the algorithm for a single Ingress resource (and so, in our setup, a single application namespace).

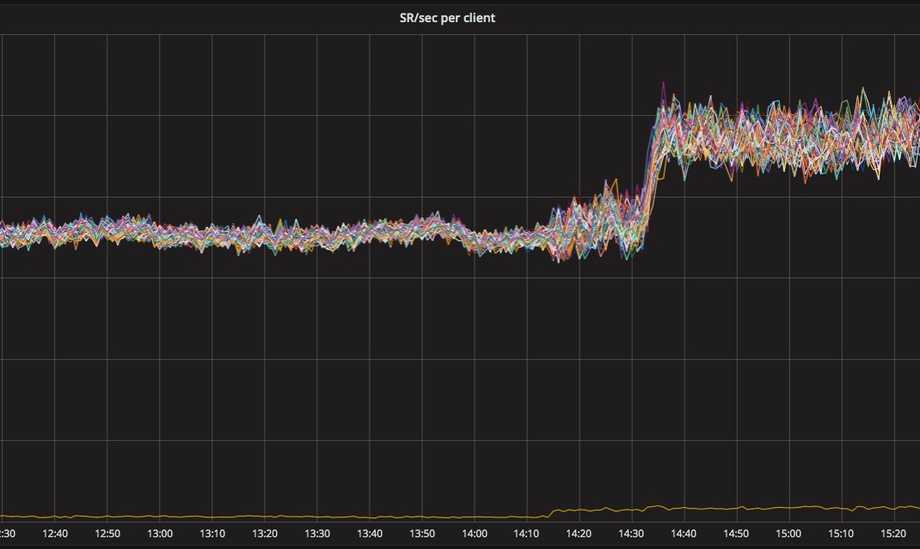

This graph shows the average number of requests per second dispatched to each pod around the time we switched to the `ewma` algorithm.

In the graph:

- initially the `round_robin` algorithm is used: low standard deviation, but no scaling in place

- 14:10: the new `ewma` algorithm is configured as an annotation on the ingress: higher standard deviation, as requests get different weights

- 14:30: the service is scaled in, reducing the number of pods by 20%

- the deviation stays under control, so the service handles the change gracefully

`ewma` (exponentially weighted moving average) is a different balancing implementation. It scores every pod IP using a moving average of its recent response times. In short, it tries to dispatch more requests to the backends that reply faster, on the assumption that they are less loaded.
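To make the idea concrete, here is a toy EWMA balancer in Python. It keeps an exponentially weighted moving average of each backend's response time. For every request it samples two backends at random and keeps the one with the lower average ("power of two choices"). This is our own simplified illustration, not the ingress-nginx Lua code. The selection rule, the smoothing factor `alpha` and the class name are assumptions, and the real implementation differs in details such as how scores decay over time.

```python
import random
from typing import Optional


class EwmaBalancer:
    """Toy EWMA balancer: prefers backends with a lower average response time."""

    def __init__(self, backends: list[str], alpha: float = 0.3,
                 rng: Optional[random.Random] = None) -> None:
        if len(backends) < 2:
            raise ValueError("need at least two backends")
        self.alpha = alpha  # weight of the newest sample; 0.3 is an arbitrary example value
        self.ewma: dict[str, Optional[float]] = {b: None for b in backends}
        self.rng = rng or random.Random()

    def _score(self, backend: str) -> float:
        value = self.ewma[backend]
        # backends with no samples yet score 0.0, so they get tried early
        return 0.0 if value is None else value

    def pick(self) -> str:
        # power of two choices: sample two distinct backends, keep the faster one
        first, second = self.rng.sample(list(self.ewma), 2)
        return first if self._score(first) <= self._score(second) else second

    def record(self, backend: str, response_time: float) -> None:
        old = self.ewma[backend]
        # exponentially weighted moving average; the first sample seeds the average
        self.ewma[backend] = (response_time if old is None
                              else self.alpha * response_time + (1 - self.alpha) * old)
```

After each proxied request you would call `record()` with the measured response time. A backend that slows down under load sees its score rise and gets picked less often. This is consistent with the flat deviation we observed during rollouts.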

As you can see from the graph, this algorithm shows a higher standard deviation than round robin in normal operation. During the rollout of a deployment, however, the deviation no longer grows: it stays flat! Our services are now safeguarded from the ingress balancing DDoS.

Kudos

We are happy to say that the ingress-nginx project maintainers were super responsive on this issue. A patch has already been proposed in the library that performs round robin (the `resty.roundrobin` module used above). The patch makes round robin dispatch the first and subsequent requests starting from a random IP in the list. Even if the list is the same for multiple ingress pods, the first request arriving at each ingress pod will then be routed to a random backend. We hope the new library version will be included in the next release, and that reading this blog saves you some headache while debugging your services!
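The idea behind the proposed patch can be sketched as a round robin that picks a random starting offset when it is created. Every ingress replica still walks the same list in the same order, but from a different place. This is our own minimal Python illustration, not the patch to `resty.roundrobin` itself. The class and function names and the pod addresses are hypothetical.

```python
import random
from collections import Counter
from typing import Optional


class RandomStartRoundRobin:
    """Plain round robin whose starting position is chosen at random."""

    def __init__(self, endpoints: list[str], rng: Optional[random.Random] = None) -> None:
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self.endpoints = list(endpoints)
        rng = rng or random.Random()
        # the key idea of the fix: each balancer instance starts at a random offset
        self.index = rng.randrange(len(self.endpoints))

    def pick(self) -> str:
        endpoint = self.endpoints[self.index]
        self.index = (self.index + 1) % len(self.endpoints)
        return endpoint


def first_request_spread(endpoints: list[str], ingress_replicas: int,
                         seed: int = 0) -> Counter:
    """Where the first request of each simulated ingress replica lands."""
    rng = random.Random(seed)
    return Counter(RandomStartRoundRobin(endpoints, rng).pick()
                   for _ in range(ingress_replicas))


if __name__ == "__main__":
    pods = [f"10.0.0.{i}:8080" for i in range(1, 6)]  # hypothetical pod IPs
    print(first_request_spread(pods, ingress_replicas=20))
```

Compared with a shared, fixed starting point, the first requests after a configuration refresh are now spread across the pods instead of all landing on the first one.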

Happy k8s’ing 😎!