Hybrid Cloud

The lastminute.com platform is hosted across an on-premise data center and two public cloud providers: Amazon Web Services and Google Cloud Platform.

In 2020 we started the transition from a purely on-premise architecture to a “Cloud First” approach, with the goal of shrinking the data center and redesigning it as cloud-oriented infrastructure as code.

Why are we reviewing our data centres?

We would like to reduce our environmental footprint and improve efficiency.

To do that, we’re moving towards a hybrid hyper-converged datacenter, that is, a mix of on-premise, public cloud, and edge environments.

And why multiple data centres?

The reason is that some services benefit heavily from the scalability offered by the cloud, while others simply cannot be moved there because of technical constraints (legacy software) or legal ones (PCI, GDPR), or because they would cost more in the cloud than on-premise, for example dedicated GPU servers for machine learning.

Leveraging VMware

However, in our on-premise datacenter we’re not able to optimise the existing resources, for several reasons: we use multiple technologies and methodologies, and we allocate resources in many different ways. Some hardware is still used as physical servers, many virtual machines are based on old technologies, and other hardware hosts Kubernetes clusters.

The idea is to leverage a single solution, VMware, to consolidate all of our on-premise resources and remove dependencies on the underlying hardware.

It is no secret that hardware management is a real cost that affects both budgeting and provisioning forecasts.

On top of VMware we’re going to run virtual machines, database clusters, Kubernetes clusters, and in general any appliance needed to support modern applications. At this point we will be able to:

- Easily remove any superfluous hardware, thus reducing power consumption and carbon emissions

- Allocate the right workload to the proper datacenter. Does a workload work better in the Cloud? Let’s move it to the cloud, then! This is the case, for example, for all the services that require elasticity.

Native managed services on Cloud

On Cloud, we have decided to adopt native “managed services”, instead of building custom infrastructure where not strictly needed, in order to fully harness the advantages of the Cloud:

- Reduce time to build new infrastructure and offer new services

- Invest Engineering effort on optimisation and innovation rather than maintenance, updates, and management of low level outages

- Reduce time to recover from failures

- Reduce business risk and ease compliance with industry standards

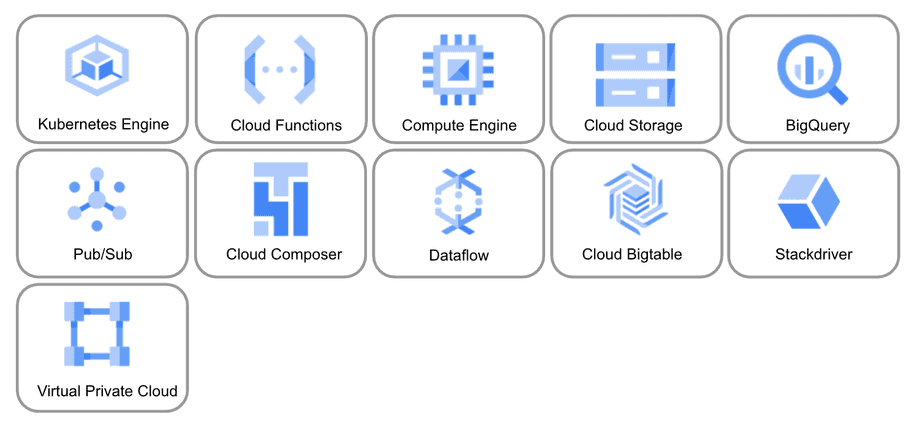

Google Cloud has been adopted mainly to build a scalable Data Lake and Data Analytics architecture based on managed services like Bigtable, Pub/Sub, Dataflow and BigQuery. The platform is managed directly and very effectively by our Data & Analytics team, without extra effort for the Operations teams.

Amazon Web Services was introduced at lastminute.com several years ago. More recently, as a result of a successful Proof of Concept, we decided to invest heavily in it, and in the near future AWS will become our main Cloud solution.

We migrated the shopping search funnels of our Hotels, Dynamic Package and Flight products from on-premise to the Public Cloud, because this is where the “elasticity and scalability” embedded in Cloud technology can unleash the biggest value for us.

We evolved the containerized services hosted on on-premise Kubernetes clusters (introduced back in 2016) with the adoption of managed services like EKS.

Autoscaling capabilities allow us to scale resources up quickly and back down again based on traffic volumes, without any downtime. This gives us the best balance between cost and customer usage, and is more cost-effective than buying hardware that is not fully utilised for most of the year.

Our business is not only seasonal: there is also a high variance between day and night. On average, some applications use 30% of their capacity during the night, but require 3 times the standard capacity during peak hours.
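To make the day/night variance concrete, here is a minimal sketch of the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The baseline of 10 replicas, the 60% CPU target and the hourly traffic profile are hypothetical numbers chosen for illustration, not our production values, and the sketch ignores HPA details such as tolerance and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core HPA rule: scale replicas in proportion to metric / target, rounding up."""
    return math.ceil(current_replicas * current_metric / target_metric)


# Hypothetical service: 10 replicas handle "standard" load at a 60% CPU target.
BASELINE_REPLICAS = 10
TARGET_CPU = 60

# Night: load is 30% of standard, so average CPU would sit at 18%.
night_replicas = desired_replicas(BASELINE_REPLICAS, 18, TARGET_CPU)
# Peak: load is 3x standard, so average CPU would reach 180% of one baseline fleet.
peak_replicas = desired_replicas(BASELINE_REPLICAS, 180, TARGET_CPU)

# Hypothetical daily profile: (hours, replicas needed in those hours).
daily_profile = [(8, night_replicas), (12, BASELINE_REPLICAS), (4, peak_replicas)]

elastic_replica_hours = sum(hours * replicas for hours, replicas in daily_profile)
fixed_replica_hours = 24 * peak_replicas  # fixed hardware must be sized for the peak

print(night_replicas, peak_replicas)
print(elastic_replica_hours, fixed_replica_hours)
```

Under these assumed numbers, a fleet sized permanently for the peak consumes far more replica-hours per day than one that follows the traffic, which is the cost argument made above.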

Also, thanks to EKS and custom automation, the Kubernetes version upgrade process has been reduced from around 1 month to 3 days with zero downtime, controlled entirely by the CI/CD workflow.

We are also able to build the whole platform from scratch in minutes thanks to full automation (IaC and CI/CD), which is a huge improvement from a Business Continuity and Disaster Recovery point of view.

Databases

For many years, the strategy was to invest in MySQL relational databases by creating large shared Galera Clusters for different applications, managed by a central Datastore engineering team.

Investing in a single technology had the advantage of consolidating the skills of the Datastore Engineering team on MySQL, focusing on general database optimisations.

Unfortunately, this approach created problems over the years: different microservices were integrated directly through the shared databases, so a disruption of a single large cluster affected multiple business products.

More recently, we have extended the set of supported technologies, introducing NoSQL databases like MongoDB.

Our vision is to let engineers use the right technology for the right use case and use the platform in a self-service way, taking on part of the data management themselves. This is why we are investing in self-service capabilities.

We are also splitting large databases into dedicated ones, where each microservice has its own schema and integrates with other microservices through REST APIs.

Currently, we are migrating the Shopping Funnel databases from on-premise to AWS RDS in order to increase reliability and security, while reducing maintenance effort so that we can focus more on the business and on optimisations.

Keeping our infrastructure safe

In addition to the scalability, reliability and security offered by cloud providers, we also rely on edge infrastructures such as Cloudflare to reduce latency and increase speed in delivering content to our end users. This also helps us keep our infrastructure safe from external threats such as bots, scrapers, DDoS, and potential vulnerabilities.

When it comes to B2B integrations (our offers are included in the results of meta-search engines such as Skyscanner, Google Flights, Jetcost) we rely on solutions like Kong API Gateway to support our API strategy and address concerns like authentication, rate limiting, throttling, analytics, and monitoring.
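As an illustration of what rate limiting at the gateway means for an API consumer, here is a minimal token bucket sketch. It is a generic textbook technique, not how Kong implements its rate limiting, and the class name, parameters and numbers are all hypothetical. The clock is passed in explicitly so that the behaviour is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Generic token bucket: allows bursts up to `capacity`, refills at a steady rate."""

    capacity: float           # maximum burst size, in requests
    refill_per_second: float  # sustained request rate allowed
    tokens: float = field(init=False)
    last_refill: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        # Start with a full bucket so the first burst is accepted.
        self.tokens = self.capacity

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (in seconds) is accepted."""
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical partner limit: bursts of 2 requests, 1 request per second sustained.
bucket = TokenBucket(capacity=2, refill_per_second=1)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
print(decisions)  # [True, True, False, True]
```

In a gateway, a bucket like this would typically be kept per consumer (for example per API key), so that one meta-search partner exhausting its quota does not affect the others.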

Want to discover more?

This is the sixth and last in a series of articles where we talk about our pink world. If you want to discover more, read: