Hotel Data Deduplication

Discover how incremental design and fast feedback cycles shaped the evolution of the hotel deduplication feature, leading to a better customer experience and higher productivity for the internal quality teams.

Duplicate Hotels: An Unwelcome Guest in the Hotel Booking Experience

Data deduplication is a critical process for managing data in the travel industry, particularly in the hotel sector. In essence, data deduplication refers to the process of identifying and removing duplicate entries of the same hotel within the dataset.

In our case, we import and store hotel data, such as Hotel XYZ, which must be stored in our system before it can be displayed on the lastminute.com page. We receive information about the same hotel from multiple sources in different representations. Without a proper deduplication process, this leads to duplicate entries, which is particularly problematic in large datasets. For example, one provider may identify the Hotel Mayorazgo by this exact name, while another refers to the same hotel as Hotel Mayorazgo Plus. In both cases we should treat it as the same hotel. Related metadata (such as images, descriptions, services and so on) must also be unified under the same hotel to avoid repetition.
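To make the Hotel Mayorazgo example concrete, here is a minimal sketch of a name comparison in Python. It is not the algorithm used in production: the helper names, the `0.8` threshold and the choice to compare names only are assumptions made for illustration, and a real matcher would also weigh signals such as address or location.

```python
import re
from difflib import SequenceMatcher


def normalise(name: str) -> str:
    """Lower-case the name and collapse punctuation and extra whitespace."""
    without_punctuation = re.sub(r"[^\w\s]", " ", name.lower())
    return " ".join(without_punctuation.split())


def name_similarity(a: str, b: str) -> float:
    """Return a similarity ratio between 0.0 and 1.0 for two hotel names."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def looks_like_same_hotel(a: str, b: str, threshold: float = 0.8) -> bool:
    """Hypothetical rule: names above the threshold are potential duplicates."""
    return name_similarity(a, b) >= threshold


print(looks_like_same_hotel("Hotel Mayorazgo", "Hotel Mayorazgo Plus"))
print(looks_like_same_hotel("Hotel Mayorazgo", "Ritz Madrid"))
```

A name-only rule like this is fragile on its own, since two different hotels can have near-identical names, which is one reason wrongly merged hotels are a real risk.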

Duplicate data can quickly accumulate, leading to increased storage costs, reduced system performance, and most importantly, reduced accuracy in the information displayed to the customer. This can result in a poor customer experience, leading to lost sales and damage to a travel company’s reputation.

So how does this work in real life?

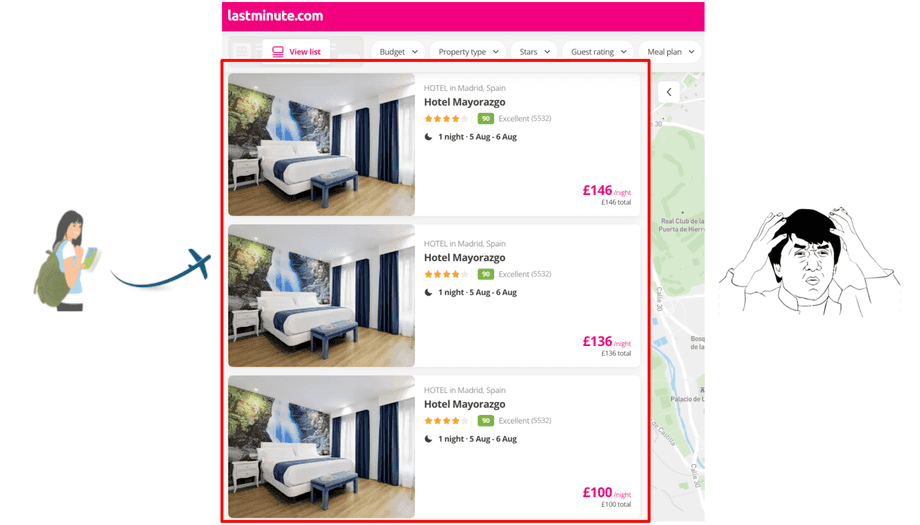

Imagine this scenario: Victoria, an enthusiastic traveler, is planning a trip to Madrid. She decides to book a hotel through our website, lastminute.com. As she starts browsing the available accommodations, she notices that the same hotels appear multiple times with different prices. After trying to understand the differences, she gives up, planning to come back to our webpage at some later point.

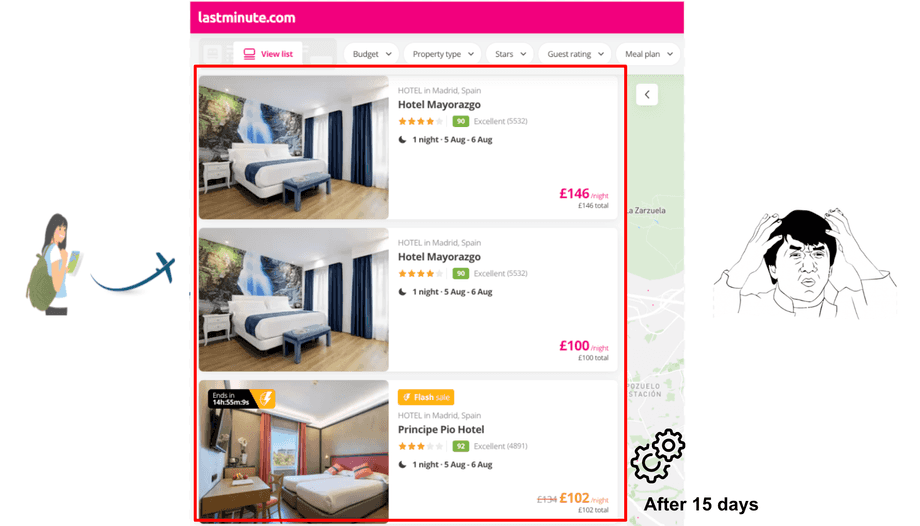

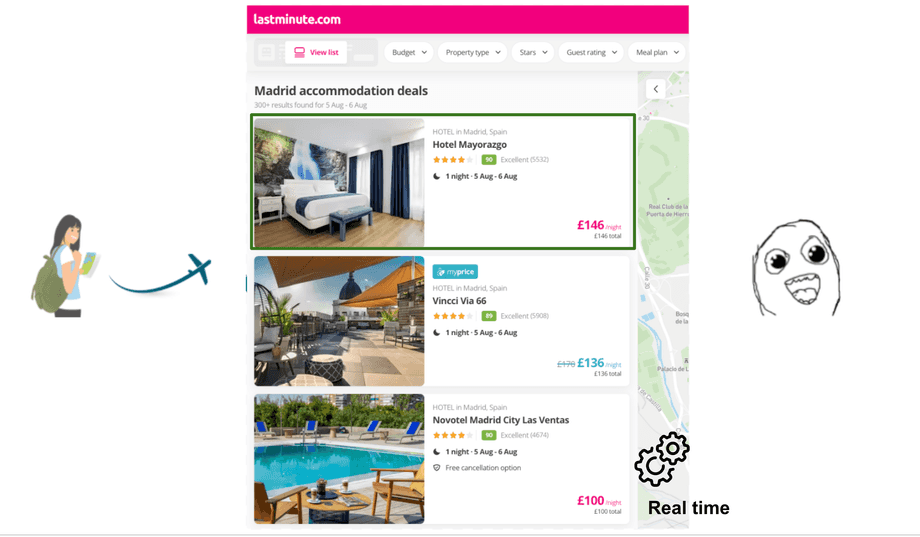

She decides to give it another try and comes back to our webpage one month later. She is still determined to visit Madrid, and she runs the same search that she did one month earlier. This time, she sees fewer duplicated hotels, but the content on the webpage is still confusing and she does not know whether to trust the company. From her viewpoint, lastminute.com does not look like a trustworthy source. She gives up and looks for a more trustworthy place to book her accommodation.

But why are these duplicate entries still present? Let’s find out!

Behind the Scenes

Do you know what was happening behind the scenes during that month Victoria waited before returning to our website? We had implemented an algorithm to prevent displaying the same accommodation multiple times and enhance customer loyalty by removing duplicate hotels. However, there were significant issues with the execution of the deduplication algorithm.

Decoding Delays: Hotel Deduplication’s Influence on Real-time results

The deduplication algorithm took an astonishing amount of time to complete: nearly half of the month. The root cause was that the hotel deduplication code was part of a monolithic service that also housed other batch processes. Each hotel's data was consumed by this service through HTTP API calls, and as the dataset grew, these calls added up to a significant performance overhead.

Scaling Woes: Challenges and Potential Data Loss

As mentioned earlier, the deduplication logic was part of a legacy monolithic service, which made it hard to scale that logic independently and to handle the high volume of API calls efficiently. This resulted in scalability issues and a risk of data loss.

Accuracy Matters: Role of Missed Deduplications

The deduplication algorithm is designed to process different representations of the same hotel from various providers and eliminate the duplicates among them. Unfortunately, the previous algorithm could not identify duplicates with enough confidence and left some of them in the system. Consequently, customers opted to switch to a competitor that gave them a greater sense of trustworthiness. This resulted in lost potential income and a decline in customers' trust.

The Crucial Role of Real-Time Deduplication

When a customer searches for a hotel in a specific place, it is crucial to provide them with real-time, updated content information. This means that, even if the same hotel is provided by different suppliers, we should make it possible for the algorithm to process them as fast as possible. By ensuring that the customers see only one unique hotel, we enhance customer loyalty as well as provide the product at the lowest base price possible.

The Ideal Situation

The goal is to have a single representation of each hotel property that offers the customer the best deal possible. To get there, the algorithm needs to be fast and to have a low margin of error, and the previous algorithm fell short on both counts.

Immediate Focus Areas

- Improve the Hotel deduplication speed

- Enhance the accuracy of hotel data

Hotel Deduplication Algorithm: a 10,000 ft overview

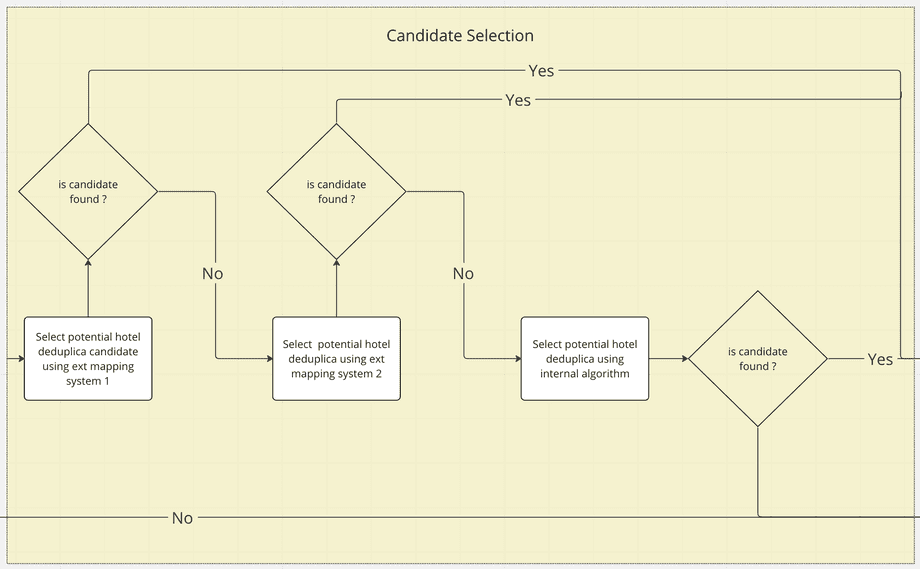

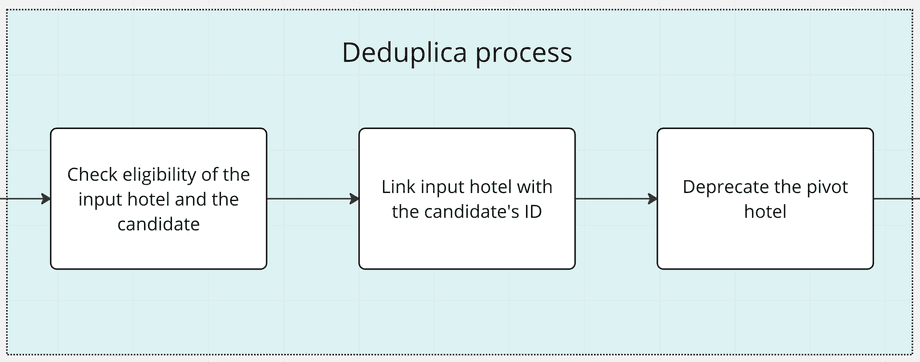

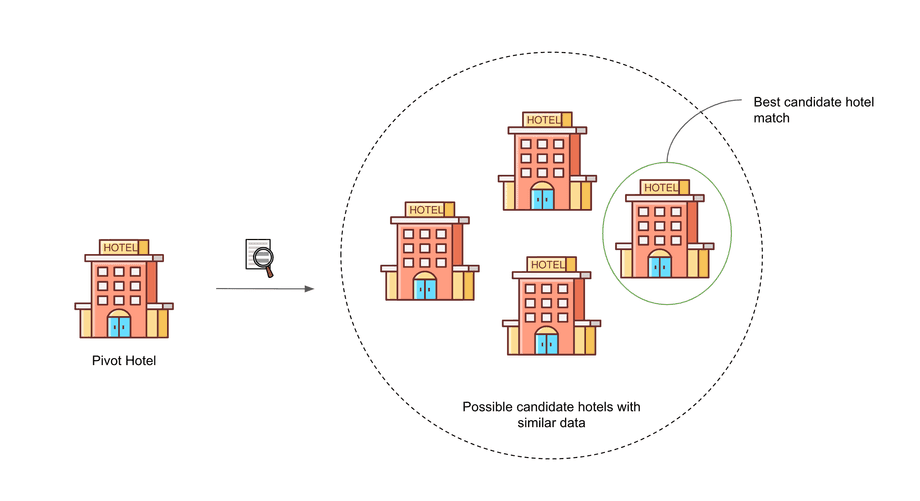

The critical step in the deduplication process is to identify the potential duplicate hotel with the latest details, which is known as the candidate hotel. The hotel used as an anchor to find related duplicate entries is called the pivot hotel.
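The pivot and candidate roles can be sketched as follows. This is only an illustration under stated assumptions: the `ProviderHotel` fields, the matching rule (same city plus a similar name) and the `0.8` threshold are hypothetical and do not reflect the production rules.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher
from typing import Iterable, Optional


@dataclass(frozen=True)
class ProviderHotel:
    provider: str
    name: str
    city: str
    updated_on: date


def is_potential_duplicate(pivot: ProviderHotel, other: ProviderHotel,
                           threshold: float = 0.8) -> bool:
    """Hypothetical rule: same city and a sufficiently similar name."""
    if other is pivot or other.city.lower() != pivot.city.lower():
        return False
    ratio = SequenceMatcher(None, pivot.name.lower(), other.name.lower()).ratio()
    return ratio >= threshold


def find_candidate(pivot: ProviderHotel,
                   inventory: Iterable[ProviderHotel]) -> Optional[ProviderHotel]:
    """Return the potential duplicate with the latest details, if any."""
    matches = [h for h in inventory if is_potential_duplicate(pivot, h)]
    return max(matches, key=lambda h: h.updated_on, default=None)


pivot = ProviderHotel("provider_a", "Hotel Mayorazgo", "Madrid", date(2023, 1, 10))
inventory = [
    pivot,
    ProviderHotel("provider_b", "Hotel Mayorazgo Plus", "Madrid", date(2023, 3, 1)),
    ProviderHotel("provider_c", "Hotel Mayorazgo", "Madrid", date(2023, 2, 1)),
    ProviderHotel("provider_b", "Ritz", "Madrid", date(2023, 4, 1)),
]
candidate = find_candidate(pivot, inventory)
print(candidate.provider if candidate else "no candidate")
```

Here two entries match the pivot, and the one with the most recent `updated_on` date is picked as the candidate.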

The Hotel Deduplication Algorithm

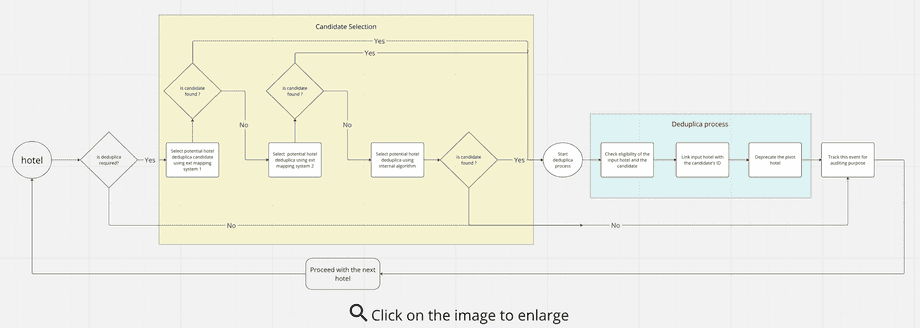

The flow chart below shows the deduplication process that each hotel in the inventory goes through.

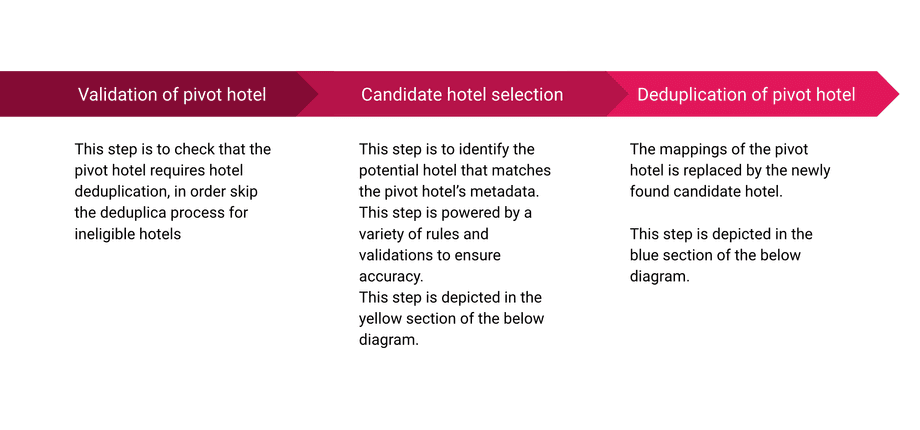

3 stages of the Hotel Deduplication process

Execution

Now that we have given an overview of the problem and the outcome we wanted to achieve, this section goes through the execution phase. We will explain how the work was divided, the benefits of the feedback loop we built (involving different teams), and how we used metrics to plan the next steps.

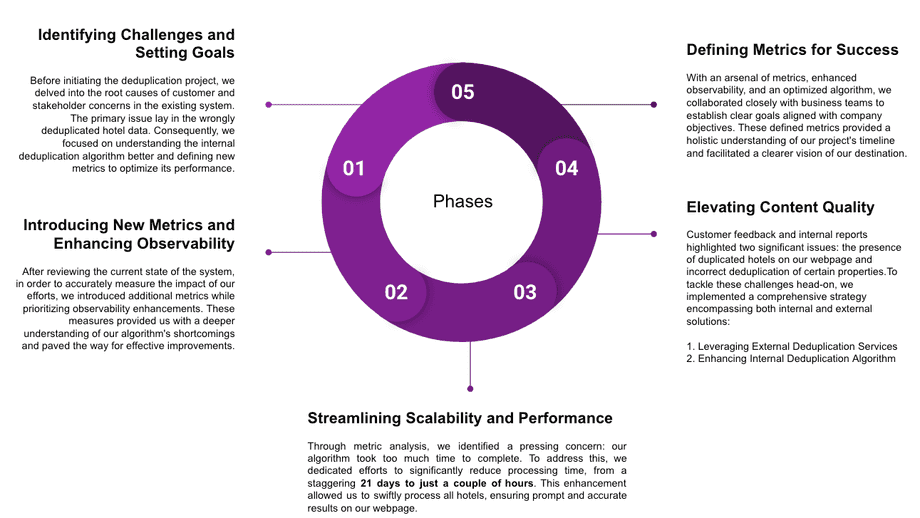

How was the work divided and executed in phases?

Before starting the work we analysed the information we had regarding the deduplication. After checking the metrics and data we had around the issues that arose, we could identify how we wanted to proceed and the milestones to achieve. Overall, we can summarise the division of the work in the following phases:

Benefits of the feedback loop with the quality team - faster feedback -> faster fixes

All the changes to the algorithm were implemented thanks to the feedback loop we built between our team and different stakeholders, both internal and external to the company.

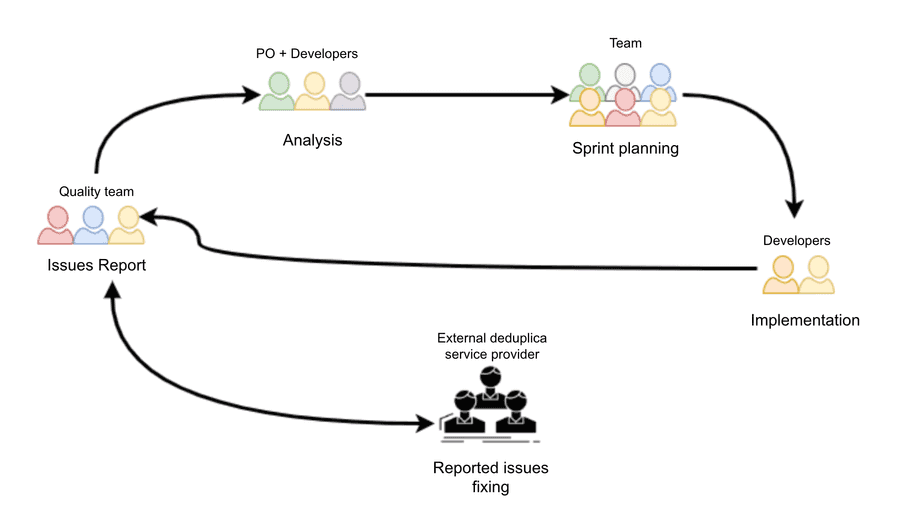

After several iterations on how to optimise the communication among different stakeholders to have the new feature/improvement live as fast as possible, we defined the following approach:

- The Quality team was in charge of reporting issues with the deduplication process, both internally and externally. They got in touch with the external deduplication service provider as soon as they noticed that some hotels were not being deduplicated correctly, so that the provider could fix the issues and we could improve our understanding of the deduplication algorithm. They got in touch with us when they found issues with our internal algorithm, or things we could improve to reduce the number of incorrectly deduplicated hotels. These meetings involved a member of the Quality team, the Product Owner, and two developers of the team.

- The Product Owner and the two developers analysed the reported issue to gather more context and shared it with the rest of the team during the Sprint Planning.

- In the Sprint Planning, all the members of the team learned about the deduplication issue that had been reported and analysed. Together, they agreed on how to implement the fix and estimated its technical complexity.

- Once the issue had been discussed by the whole team and added to the Sprint, one or two developers worked on it and released it to production.

- The developers who worked on the task notified the Quality Team to validate the correct output.

We applied this feedback loop for the whole duration of the project. Thanks to the faster feedback it gave us, we were able to deliver faster fixes and keep the whole team aligned on what was going on and when.

How did metrics/observability help us plan the next steps? Metrics-driven conversations

The feedback loop would not have been possible without good metrics and observability of what was going on in the system. All the conversations we had with the different stakeholders were therefore supported by data. For example, thanks to the analysis and metrics collected by leveraging an external mapping system, we were able to identify issues in our internal deduplication algorithm.

Taking this information into consideration, we could prioritise the tasks correctly and organise the work within each sprint.

Results and Outcomes

So, after applying all the changes explained in the previous paragraphs, what results did we obtain? In this section we share some of the results of the improvements we made to the algorithm, both in terms of execution time and in terms of the number of unique hotels in our portfolio. We use the term provider hotels for the hotels coming from suppliers, and internal hotels for the hotels we consider at the company level. For example, one internal hotel can group several provider hotels that we consider to be the same hotel.

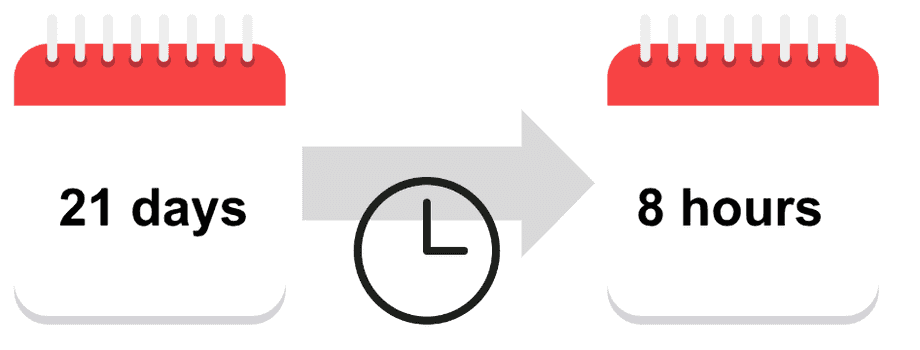

Execution time

After applying the changes to the algorithm, we were able to reduce its execution time from 21 days to 8 hours. With 21 days being 504 hours, that is roughly a 63-fold reduction. It means the customer no longer needs to wait 21 days before seeing the changes produced by the algorithm reflected on the page.

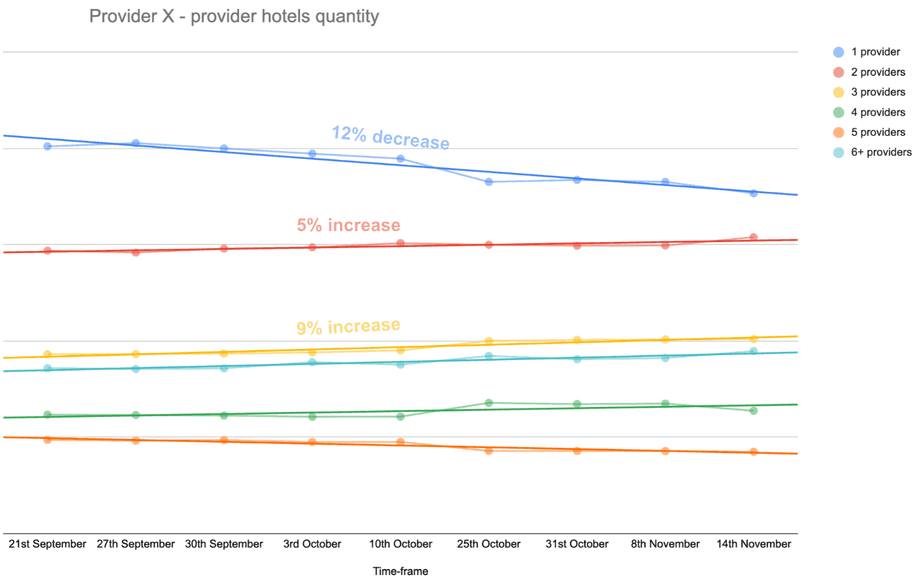

Number of internal hotels associated to only one provider

We also tried to estimate the improvement in content quality by counting the internal hotels associated with only one provider. Our assumption was that many of these single-provider internal hotels were undetected duplicates. If so, improving the algorithm should decrease the number of internal hotels associated with only one provider and, consequently, increase the number of internal hotels associated with that provider plus one or more other providers.

As you can see in the graph below, the number of internal hotels associated only with Provider X has decreased over time. Meanwhile, the number of internal hotels associated with Provider X and one other provider, with Provider X and two other providers, and so on, has increased. This is because the more provider hotels we deduplicate, the fewer unique internal hotels we have. Over the time frame we observed:

- Internal hotels associated with only Provider X: 12% decrease

- Internal hotels associated with Provider X and another provider: 5% increase

- Internal hotels associated with Provider X and two other providers: 9% increase
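A metric of this kind can be computed by grouping internal hotels by the number of distinct providers attached to them. The sketch below assumes the mapping is available as `(internal_hotel_id, provider)` pairs; the function names and the sample data are hypothetical and are not the figures reported above.

```python
from collections import Counter, defaultdict
from typing import Iterable, Tuple


def provider_count_distribution(links: Iterable[Tuple[str, str]]) -> Counter:
    """Map 'number of distinct providers' to 'number of internal hotels'."""
    providers_by_hotel = defaultdict(set)
    for internal_id, provider in links:
        providers_by_hotel[internal_id].add(provider)
    return Counter(len(providers) for providers in providers_by_hotel.values())


def percent_change(before: int, after: int) -> float:
    """Relative change between two snapshots, in percent."""
    return (after - before) / before * 100


# Made-up snapshots: before the run, three internal hotels with one provider each.
before = [("i1", "X"), ("i2", "Y"), ("i3", "X")]
# After the run, i2 was recognised as a duplicate of i1 and merged into it.
after = [("i1", "X"), ("i1", "Y"), ("i3", "X")]

print(provider_count_distribution(before))
print(provider_count_distribution(after))
```

Comparing the two distributions run after run gives the kind of trend shown in the graph: the single-provider bucket shrinks while the multi-provider buckets grow.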

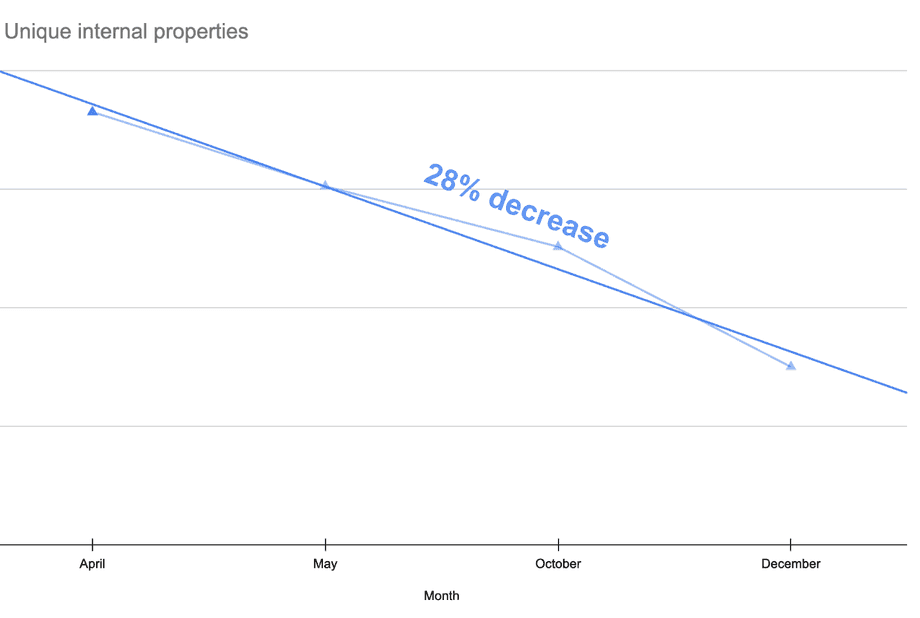

Unique internal properties

We also measured the number of unique internal properties we had between April and December, in relation to the newly imported properties. As you can see from the graph below, even though we were importing new hotels, the number of unique properties was decreasing. Over time, the number of hotels being deduplicated started to decrease as a result of the improvements we made to the algorithm. Overall, we had a 28% decrease in the number of unique internal properties in our portfolio.

Lessons Learned & Conclusion

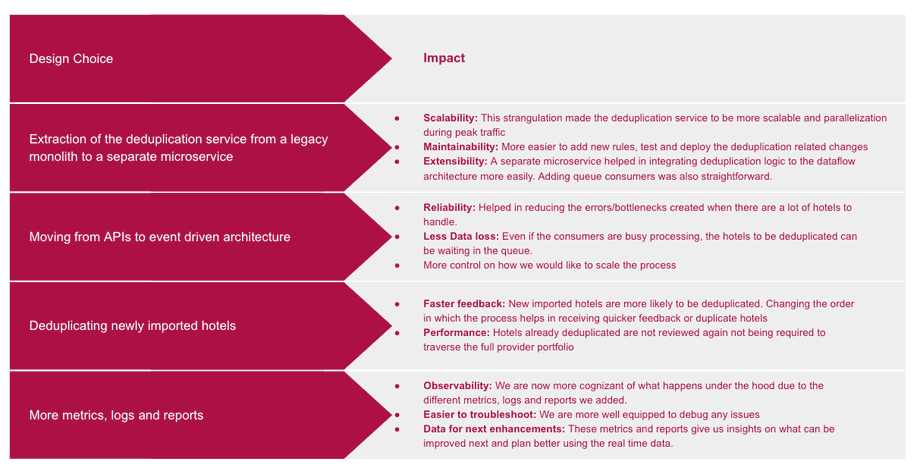

During the evolution of our internal hotel deduplication process, we were able to learn how certain design choices (big or small) could help in improving the overall performance/resilience of the system.

Hotel deduplication was a time-consuming, error-prone process because of the scale of the data to be processed and the series of calls to be made for each hotel in the data set. Initially, one of the open questions was whether migrating to an asynchronous event-driven architecture would help reduce the bottlenecks created while processing large volumes of data.
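As a rough illustration of the idea, the sketch below uses an in-process `asyncio.Queue` as a stand-in for an event stream, with several workers consuming hotel events concurrently instead of one batch job calling an API hotel by hotel. The function names, the worker count and the placeholder `deduplicate` step are all assumptions; the actual messaging technology is not described here.

```python
import asyncio
from typing import Iterable, List


async def deduplicate(hotel_id: str) -> str:
    """Placeholder for the per-hotel deduplication work (hypothetical)."""
    await asyncio.sleep(0)  # stands in for I/O such as data lookups
    return f"processed:{hotel_id}"


async def worker(queue: asyncio.Queue, results: List[str]) -> None:
    """Consume hotel events from the queue until cancelled."""
    while True:
        hotel_id = await queue.get()
        try:
            results.append(await deduplicate(hotel_id))
        finally:
            queue.task_done()


async def run_pipeline(hotel_ids: Iterable[str], worker_count: int = 4) -> List[str]:
    """Publish one event per hotel and let the workers drain the queue."""
    queue: asyncio.Queue = asyncio.Queue()
    results: List[str] = []
    workers = [asyncio.create_task(worker(queue, results))
               for _ in range(worker_count)]
    for hotel_id in hotel_ids:
        queue.put_nowait(hotel_id)
    await queue.join()  # wait until every event has been handled
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


print(sorted(asyncio.run(run_pipeline(["h1", "h2", "h3"], worker_count=2))))
```

The point of the pattern is that throughput can be raised by adding consumers, without touching the producer side.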

To test this theory, we broke down the steps required to make that migration. Below are the design choices we made along with their impact:

Takeaways

It is also worth mentioning our achievements, the challenges we faced, and how we measured the impact. All these elements, detailed below, helped us improve and gain hands-on knowledge of the topic:

- What we did well:

  - We kept improving the deduplication process until the feature reached a business-as-usual (BAU) state.

  - The real-time feedback process we had with the Quality team helped us plan our next steps better.

  - All the rules we introduced, irrespective of their impact on the portfolio, left us with the satisfaction that we were evolving the process to be better than it was before.

  - We as a team started crunching data and became more metric-driven.

  - We were able to leverage external systems to fine-tune our internal deduplication algorithm.

- Challenges:

  - The dry run was a manual, time-consuming process, and repeating it every few months meant we had to automate parts of it.

  - Coordinating with different teams introduced some delays in the process.

  - The possibility of wrongly deduplicating hotels is high, and the consequences are risky. We had to make our decisions with the aim of avoiding such scenarios.

  - Verifying and testing any evolution of the deduplication algorithm against the whole portfolio is difficult.

  - Different types of accommodation and providers added another layer of complexity. For example, even if we deduplicate our own portfolio correctly, tour operators introduce duplicated results because the same data is stored in different formats in different sources.

- Measuring the impact:

  - We measure different factors depending on the changes going live in each run: the number of newly deduplicated hotels; the quality of the data sources (which source has the most duplicates, which similarities or patterns appear in the datasets with the most duplicates, which part of the algorithm is most successful at deduplicating); the processing time; and the reduction in errors.

  - Generally, we compare these factors before the actual run in production by using the dry-run mode and getting an opinion from the web quality team.
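The dry-run idea mentioned above can be sketched as a run that computes its report on a copy of the data and only writes the result back when explicitly asked to. Everything here is hypothetical, including the `RunReport` fields and the shape of the portfolio (internal hotel id mapped to a set of provider hotel ids); it shows the pattern, not our implementation.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class RunReport:
    internal_hotels_before: int
    internal_hotels_after: int
    newly_deduplicated: int
    applied: bool


def run_deduplication(portfolio: Dict[str, Set[str]],
                      proposed_merges: List[Tuple[str, str]],
                      dry_run: bool = True) -> RunReport:
    """Merge duplicate internal hotels; in dry-run mode nothing is persisted."""
    working = {hotel_id: set(ids) for hotel_id, ids in portfolio.items()}
    merged = 0
    for keep_id, duplicate_id in proposed_merges:
        if keep_id != duplicate_id and keep_id in working and duplicate_id in working:
            # Move the duplicate's provider hotels under the hotel we keep.
            working[keep_id] |= working.pop(duplicate_id)
            merged += 1
    report = RunReport(len(portfolio), len(working), merged, applied=not dry_run)
    if not dry_run:
        portfolio.clear()
        portfolio.update(working)
    return report


portfolio = {"i1": {"a:1"}, "i2": {"b:7"}, "i3": {"a:2"}}
print(run_deduplication(portfolio, [("i1", "i2")]))  # report only, data untouched
```

The report from the dry run can be reviewed, for example by the quality team, before the same call is repeated with `dry_run=False`.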

Conclusion

Hotel deduplication is a growing challenge for the travel industry and a complete solution is yet to be found. An effective deduplication system can help companies bring a lot of value to their customers, such as:

- Increased customer loyalty, by unifying information from different sources for the same hotel

- Increased competitiveness, by providing the customer with the lowest base price possible, and simpler back-end data management thanks to a cleaner data set

In our use case, we improved the accuracy of the in-house algorithm by leveraging an external provider that allowed us to introduce new deduplication rules. We significantly improved performance by speeding up the processing time of the algorithm. We also introduced observability and monitoring to get a better overview of the deduplication outcomes. Moreover, all the work required synchronisation among different teams, from technology to business, from product to quality. This was necessary to maintain a continuous feedback loop among stakeholders who each bring different knowledge of the domain.

These results still need to be analysed rigorously before deciding on future actions. Possible future improvements could be:

- Using a data-flow architecture to make the results of the deduplication available to our customers in an even more "real-time" manner, so that hotels are deduplicated at the same time as they are imported

- A machine learning approach

- Providing the Quality Team with the results of the deduplication

There is still a lot of work to do in the hotel deduplication world. Different approaches are being used, and companies are developing and adopting new technical solutions, trying to optimise their algorithms so that they can achieve 100% accuracy.

Will the travel sector, one day, be able to reach such an optimistic result?

Credits: many thanks to Static Shock, Supply Quality and Thoughtworks teams for contributing to the success of this project.

Read next

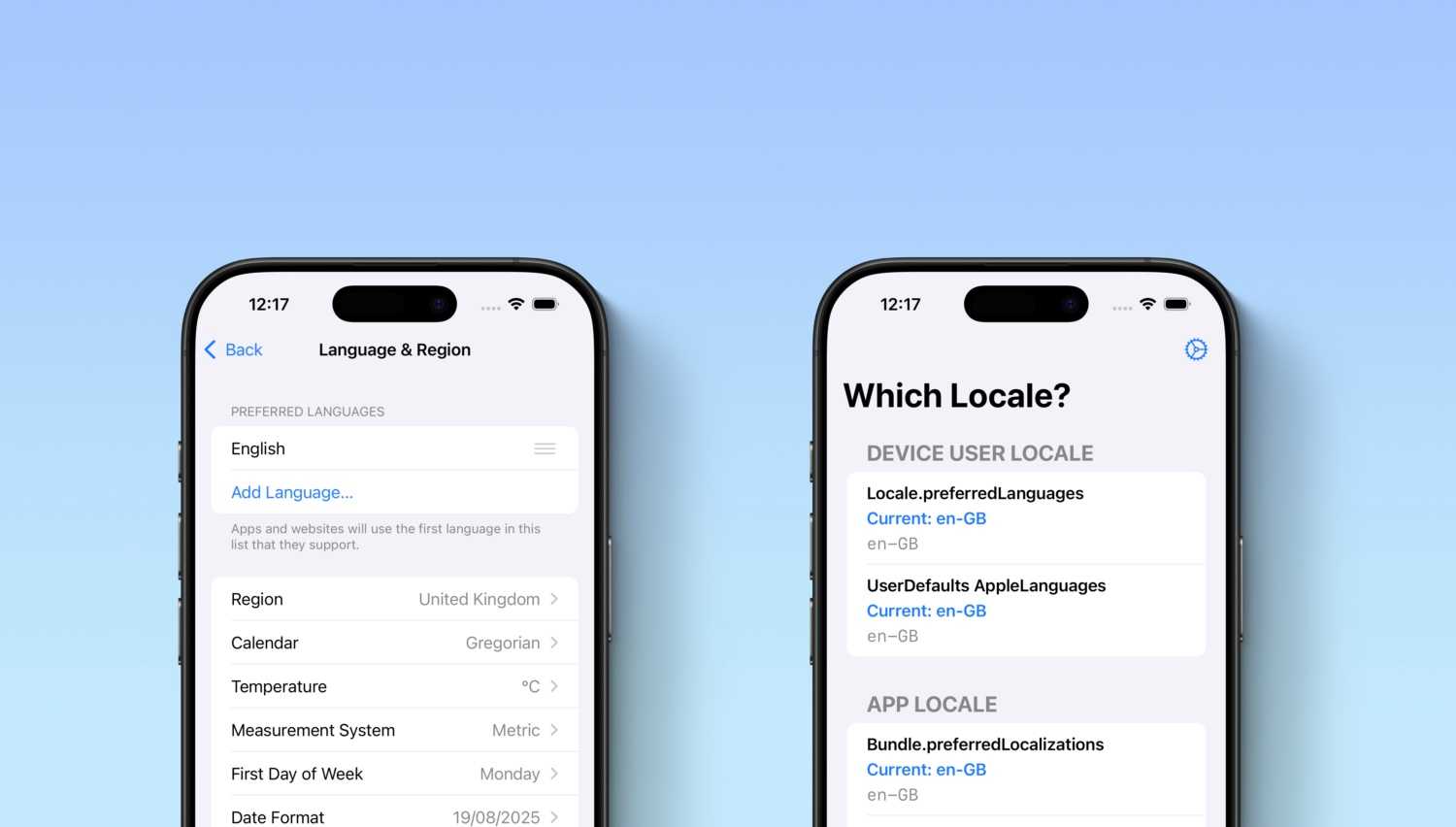

Which Locale? Decoding the Madness Behind iOS Localization and Language Preferences

iOS localization is a wild ride where device and app locales play by their own rules. But don’t worry, after some chaos, Apple’s settings actually matched our expectations. Of course, only after a few twists and turns [...]