How to be more in control of my product?

AKA: how to try not to ruin your customers’ day!

A long time ago, our CTO said something like:

“People use our website to book their holidays. Many of them go on holiday once a year and spend most of their savings on it. We have to serve them well. All of them. We cannot ruin a family’s holiday.”

When you look at the figures and see that “this issue impacts only 0.05% of customers”, it’s easy to focus on the “only 0.05%”.

What if you are that 0.05%?

- How confident are you that your product is working?

- Are you able to promptly spot issues affecting the users or the business?

- How many times do you discover that your product has a problem because someone else reports it to you?

- How many users had their day (or holiday, in my case) ruined because no one noticed we had a bug?

These are just some of the questions you could ask yourself, and my personal belief is that your answers should be:

- nearly 100%

- yes

- nearly zero

- zero.

Ok, but… is it possible? How can we achieve it?

Taking for granted that doing your best to avoid introducing bugs while you code is the baseline, I want to focus on what happens later.

No Errors Policy

As a developer, you may end up looking into logs or metrics. And you may end up finding errors. Some of these errors will be “normal errors”. Others will be “real errors”.

Ok, let me explain why, from my perspective, there is something totally wrong with this.

Regardless of the “source” I use to look for errors (it could be logs, metrics, events, a database table or whatever you want), here is my personal definition of an error.

An error is something that:

- deserves an investigation

- should be either solved or downgraded

- anyway, must soon disappear from my monitoring tools

Solved means that I spot the cause and I apply some change that will solve 100% of the error cases. The typical case is a bug.

For example, let’s pretend I have some edge case where a division by zero happens. It’s certainly possible to fix the code so that it properly handles the case (and this will probably end up improving the customer experience, too).
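A minimal sketch of what “solved” could look like for the division-by-zero case. The function name and the pricing scenario are hypothetical, chosen only for illustration:

```python
from typing import Optional


def average_price_per_night(total_price: float, nights: int) -> Optional[float]:
    """Return the average price per night, or None when it cannot be computed.

    Hypothetical example: before the fix, nights == 0 raised ZeroDivisionError
    and showed up as an error in the monitoring tools.
    """
    if nights <= 0:
        # The edge case is now handled explicitly, so the caller can show
        # a sensible message instead of failing.
        return None
    return total_price / nights
```

With a fix like this, 100% of the error cases are covered and the error disappears from the monitoring tools.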

Downgraded means two possible things:

- Downgraded to something like a warning/info (or even removed altogether). This is when I spot the cause and I understand that it’s actually a valid, expected and managed behavior for the system/product.

E.g. let’s say that every time a customer runs a search on an e-commerce site and gets no results, an error is triggered. That error is probably nonsense. It’s a valid circumstance, and it’s managed (well, it should be) by giving the customer a proper “no results for your search” message.

- Downgraded to a metric to keep monitored.

This is when I spot the cause, I understand that this is not a valid and expected behavior, but I am not able to apply a change that covers 100% of the cases. A possible example is a network error. I can tune all the network parameters, maybe play with some automatic retry where applicable, but in the end a network failure could happen. So what I want to monitor is the rate of failures, not the single error that could happen.
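The two kinds of downgrade could be sketched roughly as follows. This is only an illustration under stated assumptions: `search`, `call_with_retry`, `failure_rate` and the in-process `network_calls` counter are hypothetical names, and the counter stands in for whatever metrics client you actually use.

```python
import logging
from collections import Counter

logger = logging.getLogger("search")

# Hypothetical in-process stand-in for a real metrics client.
network_calls = Counter()


def search(catalog, query):
    """Return catalog items containing the query (case-insensitive)."""
    results = [item for item in catalog if query.lower() in item.lower()]
    if not results:
        # Valid, expected and managed behavior: log at INFO, not ERROR.
        logger.info("no results for query %r", query)
    return results


def call_with_retry(operation, attempts=3):
    """Run operation, retrying on ConnectionError.

    Each outcome is counted as a metric instead of being logged as an error;
    the exception is re-raised only when the last attempt fails.
    """
    for attempt in range(1, attempts + 1):
        try:
            result = operation()
        except ConnectionError:
            network_calls["failure"] += 1
            if attempt == attempts:
                raise
        else:
            network_calls["success"] += 1
            return result


def failure_rate():
    """Fraction of network calls that failed so far (0.0 when there were none)."""
    total = network_calls["success"] + network_calls["failure"]
    return network_calls["failure"] / total if total else 0.0
```

What ends up on the dashboard is the value of `failure_rate()` over time, not one error line per failed call.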

What I do not want is “normal errors” (a contradiction in terms…), the ones for which you ask someone “what is this?” and the answer is “it’s normal, just ignore it”.

If you start accepting “normal errors”, you will soon be unable to spot “real errors”.

The vast majority of the services I have had to deal with trigger many errors, in the form of error logs or metrics reporting some kind of error (e.g. many HTTP 5xx response codes).

How can you spot real problems?

The whole point of this approach is that, together with the real impact of the “error” on users/business/systems, I also consider the noise that it creates. An error that is not a “real error” may not be a problem for the user, but it will become a problem for whoever needs to monitor and troubleshoot the system (the fact that this could often be me is also important 😇).

Moreover, the aim is to be able to catch every single real error.

Potentially, every single error (in my business) means a customer sleeping under a bridge because something went wrong with their hotel reservation.

Formalize who is going to check the monitoring

It’s useless to have good monitoring tools if no one looks at them.

Before implementing automatic alerts, in my opinion, it’s good to spend some time (days, weeks… it depends on how much traffic you have to monitor) monitoring the system manually.

Who should do it?

The most recent approach my team adopted is to have a person on a weekly rotation who, together with some other small special duties, is in charge of checking the monitoring tools daily. In our case, those are logs and metrics on Grafana.

Thanks to the “no errors policy”, logs are quite easy for everyone to monitor: if you spot an error, it’s something to be investigated.

If you are not able to sort it out by yourself, just raise your hand and someone will jump in to support.

Metrics are a bit trickier: spotting issues is not so trivial. The basic rule is to check the trend. If you see a change in the pattern, there is something to investigate, regardless of the meaning of the specific metric. And again, someone else from the team can jump in to support if needed.

(I’ll be honest, here it is much more difficult to keep everyone in the team aligned so that everyone can do a decent job of monitoring. Even now, we sometimes miss a problem that we later notice was “quite evident” from the metrics.)
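To make “a change in the pattern” a bit more concrete, here is a rough sketch of one possible check: compare the latest value with a baseline built from recent history. This is an assumption for illustration, not how our team does it (we look at the graphs); the function name and the tolerance of 3 standard deviations are hypothetical.

```python
from statistics import mean, stdev


def pattern_changed(history, latest, tolerance=3.0):
    """Return True when latest deviates from the recent baseline.

    The baseline is the mean of history; the deviation is measured in
    sample standard deviations. The tolerance value is an arbitrary choice.
    """
    if len(history) < 2:
        return False  # not enough data to define a pattern
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat metric: any different value is a change.
        return latest != baseline
    return abs(latest - baseline) > tolerance * spread
```

For example, with a history of `[100, 102, 98, 101, 99]` a new value of `101` is within the usual pattern, while `150` is flagged as something to investigate. Note that the check does not need to know what the metric means.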

Of course, there are other possible solutions. Having a person on rotation fosters knowledge sharing, and in our team that’s feasible due to the relatively small size of the domain we work on.

Formalize the error classification and management activity

It’s useless having some nice classification on paper if no one applies it.

So…what happens when we spot an error?

We decided that the same person in charge of monitoring the system will also be in charge of managing the errors.

Managing an error means either immediately applying the required change, if it’s clear and simple enough, or bringing it to the team’s attention if it requires more discussion, a non-trivial solution or planning.

Moreover, the same person is in charge of assessing the impact of the error and applying any compensating action. E.g. maybe a communication sent to a customer got lost because of the error, so we send it again; if a system was left in an inconsistent state, we fix it, etc.

Regardless of the way it’s approached, we always aim to solve or downgrade so that in the end the error disappears from the monitoring tools in a short time.

Alerting

Of course, we do not want to check our monitoring system every 5 minutes, so we have alerts.

The first alert we have on all the modules is the one triggered on each and every error log line. If you are not really committed to a “no errors policy” this will be totally useless.
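As an illustration of “one alert per error log line”, here is a minimal in-process sketch using Python’s standard `logging` module. It is an assumption for the sake of example, not our actual setup: `AlertOnErrorHandler` and the `notify` callback are hypothetical names, and in a real system the callback would post to a chat channel or paging tool.

```python
import logging


class AlertOnErrorHandler(logging.Handler):
    """Logging handler that calls notify for every record at ERROR or above."""

    def __init__(self, notify):
        # Records below ERROR are filtered out by the handler level.
        super().__init__(level=logging.ERROR)
        self.notify = notify

    def emit(self, record):
        self.notify(self.format(record))


# Here alerts are simply collected in a list (hypothetical notify target).
alerts = []
booking_logger = logging.getLogger("booking")
booking_logger.setLevel(logging.INFO)
booking_logger.propagate = False  # keep the example output quiet
booking_logger.addHandler(AlertOnErrorHandler(alerts.append))

booking_logger.info("no results for search")        # downgraded: no alert
booking_logger.error("reservation not confirmed")   # real error: one alert
```

This only works if ERROR is reserved for things that deserve an investigation; with “normal errors” in the logs, the `alerts` list would fill up with noise.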

Typically, creating new metrics is part of the story we work on. For alerts, it depends. Maybe there is a clear idea of a reasonable alert upfront, and so we consider it part of the story. In other cases, we create alerts after having manually monitored the system for a while, so that we can set reasonable thresholds.

The golden rule is: avoid false positives. Sometimes this translates into setting more relaxed thresholds, with a concrete risk of missing some real issue. We still prefer this approach because false positives lead to an even worse outcome: they start to be ignored.
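One common way to relax a threshold is to require that it is exceeded for several consecutive samples before alerting. The sketch below shows the idea; the function name, the 5% threshold and the 3-sample window are hypothetical values, not the ones we use.

```python
def should_alert(failure_rates, threshold=0.05, consecutive=3):
    """Alert only when the last `consecutive` samples all exceed `threshold`.

    A single spike does not fire the alert, which reduces false positives
    at the price of reacting later (or not at all) to short-lived issues.
    """
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    if len(failure_rates) < consecutive:
        return False  # not enough samples yet
    return all(rate > threshold for rate in failure_rates[-consecutive:])
```

With these values, an isolated spike such as `[0.01, 0.20, 0.01]` does not alert, while a sustained rise such as `[0.01, 0.06, 0.07, 0.08]` does. This is exactly the trade-off described above: the isolated spike could be a real issue that goes unnoticed.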

Anyway, alerts are something to be managed and evolved. If we spot errors, and we did not receive a proper alert, part of the activity for managing the error is understanding how to be properly alerted next time.

Conclusion

Is it working? I do not have any real measured KPI to check, but I would say: better than every other approach I’ve tried.

It’s not easy, it requires commitment from the team, and it can sometimes be really time-consuming. There are days with no errors, and days with a couple of them to check and manage. Sometimes we have to try different ways to properly manage an error, to solve or downgrade it.

But in the end it really mitigates the risk of discovering problems that impact users or the business with weeks, months (or years!) of delay, and this for sure translates into:

- direct earnings, because more customers are able to use our product/service in the expected way

- direct savings while investigating issues, because less time is wasted digging into tons of useless, misleading and messy data, logs and metrics

- direct savings because less time is wasted manually managing customers left in some inconsistent/messy condition

- happier employees that can focus on more rewarding activities instead of annoying and frustrating troubleshooting

- increased brand reputation

Read next

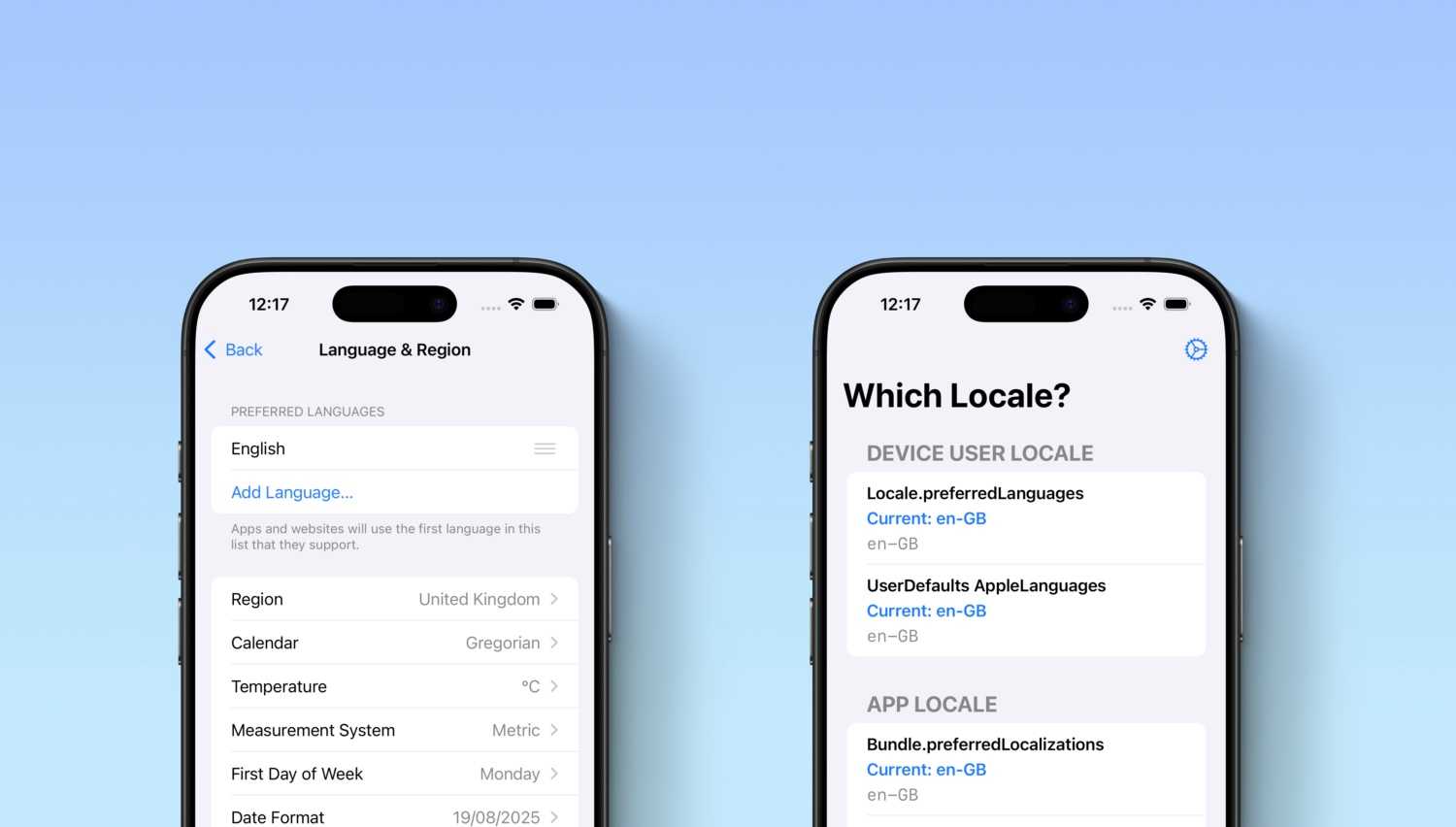

Which Locale? Decoding the Madness Behind iOS Localization and Language Preferences

iOS localization is a wild ride where device and app locales play by their own rules. But don’t worry, after some chaos, Apple’s settings actually matched our expectations. Of course, only after a few twists and turns [...]